Using MCP Prompts

So far, we've discussed how to add tools to an MCP server.

But something I haven't seen discussed much online is that an MCP server can also provide prompts to users.

This lets you use MCP as a prompt directory: a collection of prompts that users can pull in to reach their goals quickly.

I'm going to show you one of my favorite prompt templates, which I used while writing this article.

The Code

Let's start with the boilerplate: the server and the transport.

```ts
import { McpServer } from "@modelcontextprotocol/sdk/server/mcp.js";
import { StdioServerTransport } from "@modelcontextprotocol/sdk/server/stdio.js";

const server = new McpServer({
  name: "Prompt Directory",
  version: "1.0.0",
});

// ...more code in here

const transport = new StdioServerTransport();
await server.connect(transport);
```

This server will house a `cleanTranscription` prompt. I write most of my articles by dictation, and this prompt helps me clean up mistakes in the transcription.


Let's start by adding a prompt to the server. The syntax is very similar to registering a tool: instead of `server.tool`, we call `server.prompt`:

```ts
// Step 1: just the name. The call isn't complete yet; the callback comes last.
server.prompt("cleanTranscription");
```

Next, we add a description of what the prompt actually does.

The prompt takes the path of the file to clean up as an argument, so we specify that using zod.

Finally, we add a callback that returns the prompt's value. This comes back as a `messages` array, where each message has a `role` of `"user"` and some text content.
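Putting those four steps together, a complete registration might look like the sketch below. It assumes the `server.prompt(name, description, argsSchema, callback)` overload of the TypeScript SDK's `McpServer`, with zod describing the arguments. The description string and the callback name `cleanTranscriptionPrompt` are illustrative, and the prompt text is shortened to its first line; the full prompt comes in the next section.

```typescript
import { McpServer } from "@modelcontextprotocol/sdk/server/mcp.js";
import { z } from "zod";

const server = new McpServer({
  name: "Prompt Directory",
  version: "1.0.0",
});

// The callback: turns the client-supplied arguments into the prompt messages.
// The text is a shortened placeholder for the full prompt.
const cleanTranscriptionPrompt = ({ path }: { path: string }) => ({
  messages: [
    {
      role: "user" as const,
      content: {
        type: "text" as const,
        text: `Clean up the transcript in the file at ${path}`,
      },
    },
  ],
});

server.prompt(
  // Step 1: the name clients will see
  "cleanTranscription",
  // Step 2: a description of what the prompt does (illustrative wording)
  "Clean up formatting and mis-transcriptions in a dictated transcript file",
  // Step 3: the arguments, as a zod shape (prompt arguments are strings)
  { path: z.string().describe("Path to the transcript file to clean up") },
  // Step 4: the callback that returns the messages array
  cleanTranscriptionPrompt,
);

// Connect the StdioServerTransport afterwards, exactly as in the boilerplate.
```

Pulling the callback out into a named function is optional, but it keeps the prompt text testable without starting the server. Recent SDK releases also offer a `registerPrompt` method; check which one the version you have installed documents.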

With that, we've added a prompt template called `cleanTranscription` to our MCP server.

The Prompt

For those interested, here is the prompt I use:

```ts
const getPrompt = (path: string) => `Clean up the transcript in the file at ${path}

Do not edit the words, only the formatting and any incorrect transcriptions.

Turn long-form numbers to short-form:

One hundred and twenty-three -> 123
Three hundred thousand, four hundred and twenty-two -> 300,422

Add punctuation where necessary.

Wrap any references to code in backticks.

Include links as-is - do not modify links.

Common terms:

LLM-as-a-judge
ReAct
Reflexion
RAG
Vercel
`;
```

The full list has around two hundred common terms, so I've trimmed it for brevity.
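With a list that long, one option is to keep the terms as data and append them when the prompt is built, rather than hard-coding them in the template string. This is a sketch of that idea only; `COMMON_TERMS` and `withCommonTerms` are hypothetical names, and the array holds just the five terms shown above.

```typescript
// Hypothetical: the common terms kept as data. The real list has ~200 entries.
const COMMON_TERMS: readonly string[] = [
  "LLM-as-a-judge",
  "ReAct",
  "Reflexion",
  "RAG",
  "Vercel",
];

// Appends a "Common terms:" section, one term per line, to an existing prompt.
// Trailing whitespace on the prompt is trimmed so the spacing stays consistent.
const withCommonTerms = (prompt: string, terms: readonly string[]): string =>
  [prompt.trimEnd(), "", "Common terms:", ...terms].join("\n");

console.log(
  withCommonTerms("Clean up the transcript in the file at draft.md", COMMON_TERMS),
);
```

In the server, the prompt callback could then return `withCommonTerms(rules, COMMON_TERMS)`, where `rules` is the prompt text without the hard-coded term list.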

The Demo

We can connect to this server from Claude Code using the same technique we saw before.

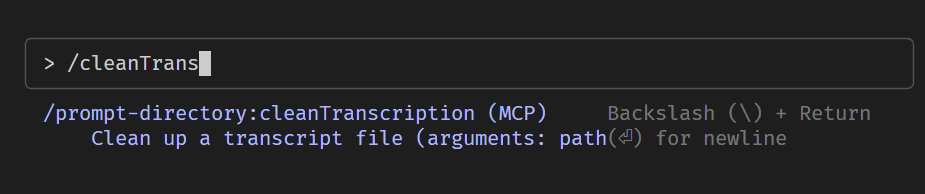

Once connected, the prompt template shows up as a command we can run from within Claude Code. Typing `/cleanTranscription` in the Claude Code interface brings it up via autocomplete:

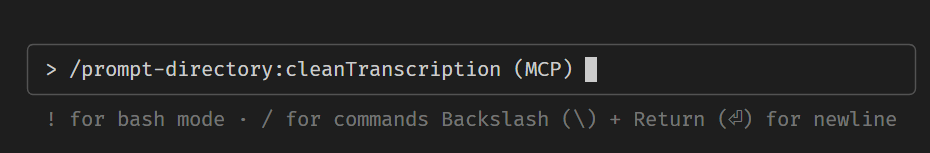

When we run it, Claude Code asks us for the `path` argument, then runs the prompt against that file.
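Roughly speaking, Claude Code is acting as an MCP client here: it lists the server's prompts to populate autocomplete, then requests the chosen prompt with the arguments you supplied. The sketch below makes those same two requests with the SDK's `Client`, assuming the `InMemoryTransport` helper from `@modelcontextprotocol/sdk/inMemory.js` so that client and server run in one process. `createServer`, `runPrompt`, the client name, and the shortened prompt text are all illustrative.

```typescript
import { Client } from "@modelcontextprotocol/sdk/client/index.js";
import { InMemoryTransport } from "@modelcontextprotocol/sdk/inMemory.js";
import { McpServer } from "@modelcontextprotocol/sdk/server/mcp.js";
import { z } from "zod";

// A cut-down copy of the Prompt Directory server (shortened prompt text).
function createServer(): McpServer {
  const server = new McpServer({ name: "Prompt Directory", version: "1.0.0" });
  server.prompt(
    "cleanTranscription",
    "Clean up a dictated transcript",
    { path: z.string() },
    ({ path }) => ({
      messages: [
        {
          role: "user",
          content: {
            type: "text",
            text: `Clean up the transcript in the file at ${path}`,
          },
        },
      ],
    }),
  );
  return server;
}

// Roughly what a client does: list prompts (for autocomplete),
// then fetch one prompt with the arguments the user supplied.
async function runPrompt(path: string) {
  const [clientTransport, serverTransport] = InMemoryTransport.createLinkedPair();
  const server = createServer();
  const client = new Client({ name: "demo-client", version: "1.0.0" });

  // Connect the server first so it is listening when the client initializes.
  await server.connect(serverTransport);
  await client.connect(clientTransport);

  const { prompts } = await client.listPrompts();
  const { messages } = await client.getPrompt({
    name: "cleanTranscription",
    arguments: { path },
  });

  await client.close();
  return { names: prompts.map((p) => p.name), messages };
}

const { names, messages } = await runPrompt("draft.md");
console.log(names);
console.log(messages[0].content);
```

Each call to `runPrompt` builds a fresh server, since an `McpServer` instance is meant to be connected to a single transport.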

Conclusion

This is a simple example of how you can use an MCP server as a prompt directory. You can provide your users with a set of prompts that they can use to drive the behavior of their LLM.

This is pretty powerful as a personal productivity tool, and also as a way to give your MCP clients ready-made commands for frequently used workflows.